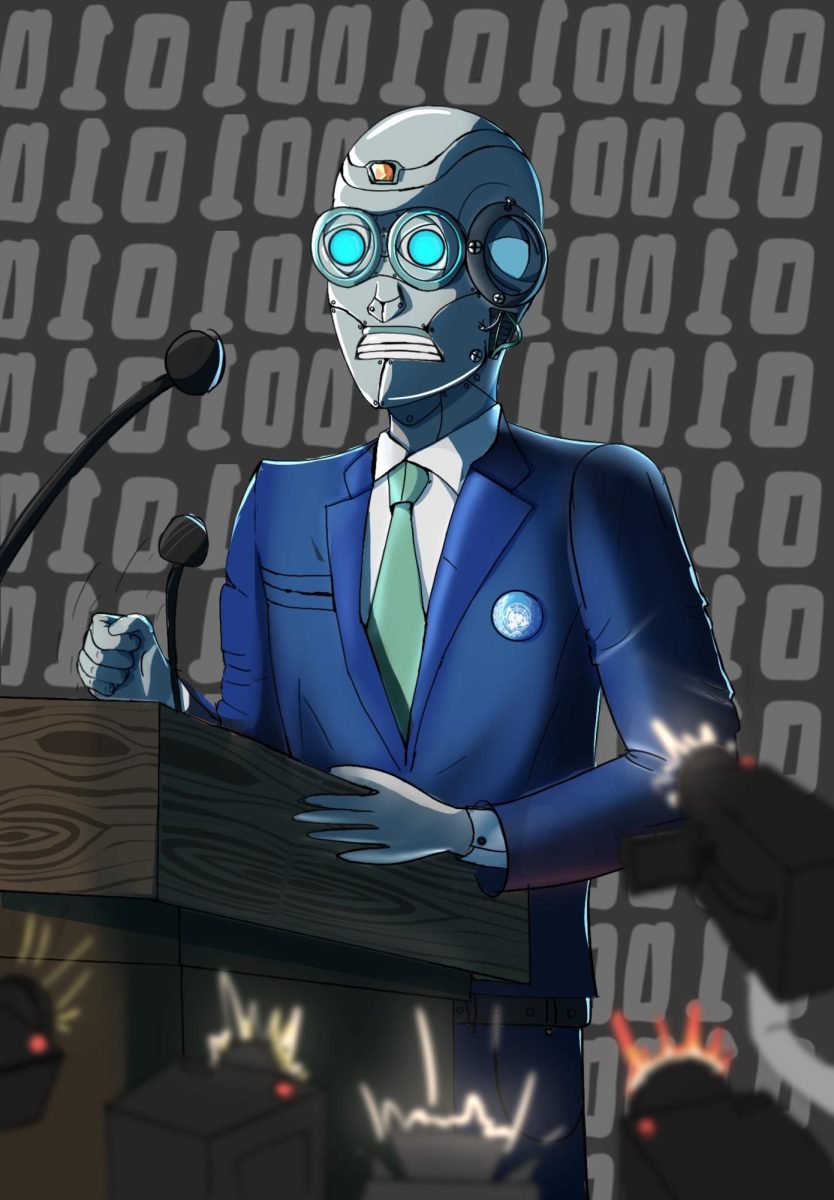

The debate over the ethics of AI has been ongoing for decades, but recent advancements have brought both its potential and its challenges into sharper focus. Applied to global problems, AI has the power to significantly benefit humanity.

According to the AI for Good initiative, led by the United Nations’ International Telecommunication Union, AI could help achieve 135 of the targets under the UN’s Sustainable Development Goals, which address critical issues like poverty, environmental sustainability and inequality. Progress toward these goals has been slow because of their sheer scale, so AI’s ability to accelerate efforts could be revolutionary.

AI is already making climate modeling faster and more accurate, as seen with projects like Destination Earth, and tools like FireAid are improving how experts predict and respond to wildfires. In education, AI-powered virtual tutors can adapt lessons to fit each student’s needs, which is especially helpful in under-resourced areas. In healthcare, AI is improving disease diagnosis and patient care with tools like wearables and image recognition systems. For poverty reduction, AI can analyze data from satellites and other sources to pinpoint areas in need and help direct aid more effectively.

There are, however, notable concerns: AI systems can unintentionally amplify existing social biases, particularly around race and socioeconomic status. Such bias is a particular risk for organizations like the United Nations, which often work with vulnerable populations. If AI models are trained on biased data or used without proper oversight, they can end up reinforcing harmful stereotypes, such as equating Africa with poverty or associating darker skin tones with negative traits.

The trouble is that biases can be difficult to spot and even harder to correct once they have taken hold, because finding them means scouring large amounts of data. According to Harvard Business Review, AI trained on data that misrepresents certain minorities can shape societal perceptions and influence real-world decisions in damaging ways by exacerbating negative biases.

A crucial step in addressing these problems is ensuring that AI systems are transparent and explainable. The key to using AI responsibly is to take a thoughtful, ethical approach, especially in settings such as the workplace or public services.

When decisions made by AI are understandable to employees and other stakeholders, the people affected by them can question, correct or improve those decisions as needed. Explainable AI techniques are essential in areas like hiring or promotions, where outcomes can have significant personal and professional impacts.

Ultimately, using AI responsibly involves more than just technical solutions – it requires a commitment to transparency, accountability and fairness at every stage of development and implementation. By following these principles, organizations can harness AI’s transformative power while minimizing the risks of unintended consequences.